IMPORTANT: The views and opinions expressed in this article are entirely personal and do not reflect the official policy or position of any affiliated organizations or institutions.

“The best way to predict the future is to invent it.” – Alan Kay

Recent breakthroughs in neuroscience and neurotechnology have opened the door to something that once felt purely speculative: the ability to reshape human cognition and behavior through precise neural interventions. This is not science fiction anymore. Systems like Deep Brain Stimulation (DBS), Brain-Computer Interfaces (BCIs), and microelectrical stimulation are being tested—and in some cases deployed—for conditions ranging from Parkinson’s disease to depression. But what happens when these technologies go beyond treatment and begin to influence the fundamental architecture of how people think, feel, and act?

One such concept, which I’ve been reflecting on, is the NeuroShaper System (NSS)—a hypothetical but increasingly plausible neural modulation technology that combines real-time brain monitoring, adaptive stimulation, and AI-driven behavioral prediction. It draws on core elements of DBS, BCI, microelectrical stimulation, and machine learning to detect, analyze, and intervene in neural activity associated with emotion regulation, decision-making, and even moral behavior.

From Therapy to Correction: A Powerful Tool?

On the surface, NSS holds immense promise. Imagine a system that could detect early signs of violent intent or emotional instability in real time and apply subtle neural stimulation to regulate those impulses. For individuals suffering from PTSD, depression, or aggression-related disorders, this could offer relief where traditional methods fall short. In principle, the brain's fear circuitry (such as the amygdala) or its reward circuitry (such as the nucleus accumbens) could be targeted to shift behavioral patterns in meaningful ways.

But therein lies the tension.

The Ethical Abyss: Who Defines “Desirable” Behavior?

As I delved deeper into this concept, my initial fascination was quickly shadowed by unease. If NSS can be used to reduce harmful behavior, who gets to define what “harmful” means? Does deviation from social norms become a target for correction? Could emotional volatility, political radicalism, or non-conforming behavior be categorized as risks to be “smoothed out”?

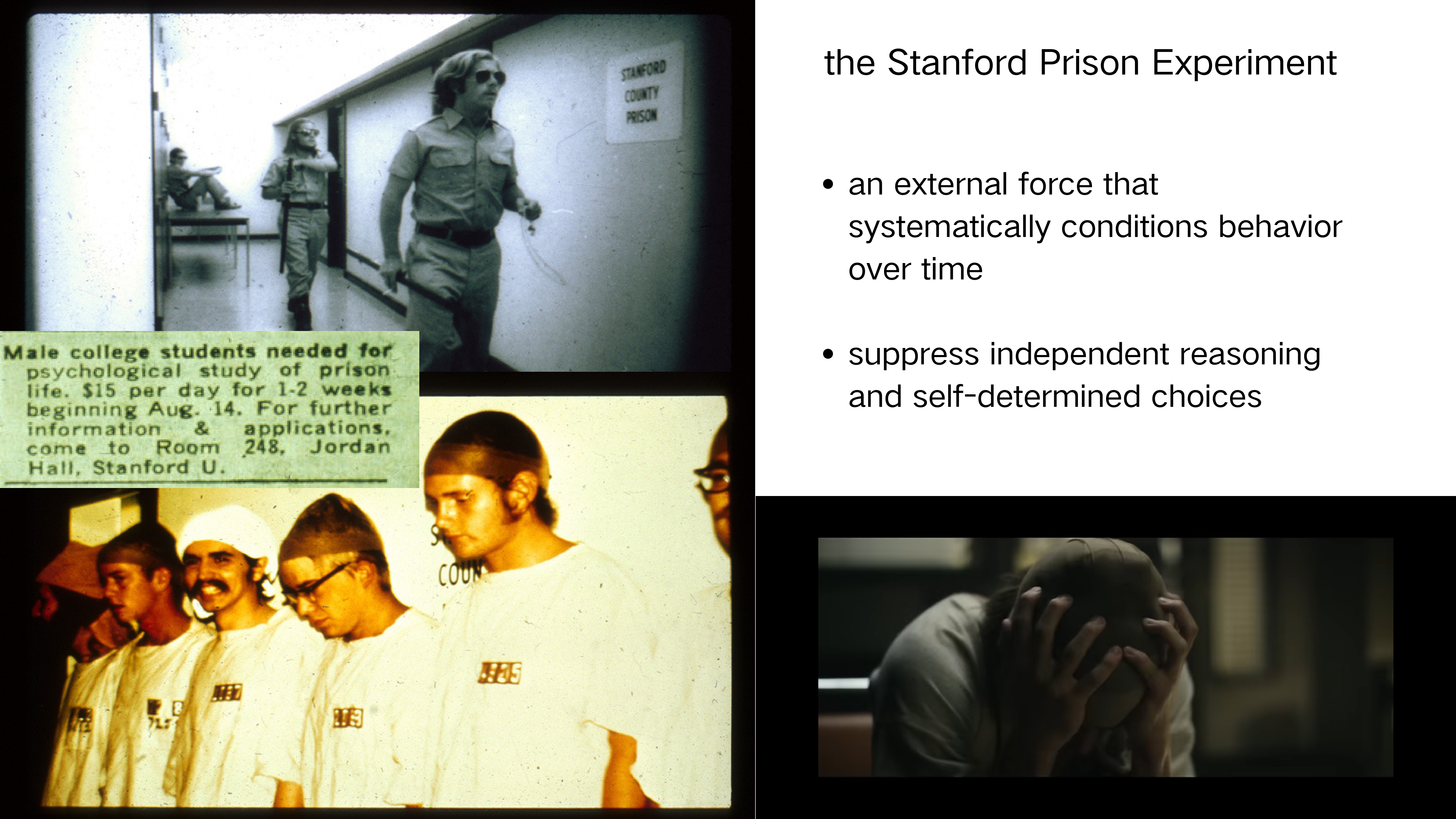

This leads to a darker possibility: behavioral optimization turning into behavioral standardization. When neural stimulation is used to reinforce specific behaviors repeatedly—especially under a framework inspired by Pavlovian conditioning—what remains of a person’s independent thought? Does the mind begin to conform not because it chooses to, but because the system silently nudges it into compliance?

The philosophical dilemma runs deep. Are we improving people, or programming them?

Privacy and the Ghost in the Machine

Another major concern is surveillance. A system that reads and responds to neural signals in real time must, by design, have constant access to the intimate workings of the human brain. Thoughts, intentions, even pre-conscious emotional states become data points.

Who stores this data? Who has access? Could governments, corporations, or even malicious actors use this system not just to guide behavior, but to manipulate or frame it? If someone’s neural data falsely flags them as “dangerous” or “unstable,” could that justify intervention—or even punishment?

It reminded me of the idea of “pre-emptive neural incitement crimes.” Imagine a world where someone is penalized not for what they did, but for what their brain patterns predicted they might do. This is not just a legal grey area—it’s a moral black hole.

A Slippery Path: From Support to Dependence

Even setting aside misuse, there’s the question of psychological dependency. If emotional regulation becomes an externally managed process—fine-tuned by AI and electrical pulses—what happens to our internal capacity for self-regulation? Could overreliance on such systems dull the very faculties they aim to support?

It’s possible that a brain repeatedly relieved of the responsibility to manage its own emotional states would slowly lose that ability. The long-term implications of this, for individuals and perhaps across generations, are both fascinating and frightening. Technology that helps us cope may also, inadvertently, erode the very resilience it seeks to preserve.

The Bigger Picture: Society, Freedom, and the Technological Gaze

As I think about the broader impact of systems like NSS, one question keeps recurring: Are we creating tools for freedom—or frameworks for control?

This isn’t just a technical or medical issue—it’s a deeply societal one. When behavior can be shaped, whoever controls that shaping controls the rules of society itself. And when “normal” or “optimal” behavior is defined by algorithms, how long before dissent, resistance, or creativity becomes a kind of deviance?

History has shown us that systems of control rarely declare themselves openly. They arrive cloaked in the language of safety, health, and optimization. But beneath the polished rhetoric often lies a quiet compromise: the surrender of autonomy for the promise of order.

Final Thoughts: Choosing the Future We Want

Reflecting on technologies like NSS forces us to confront not just what is possible, but what is acceptable. Neurotechnology is rapidly evolving, and it may very well change how we think, feel, and live. But the deeper question is: Who decides how far we go?

We must be vigilant—not only about the capabilities of these tools but about the values they encode and the systems they serve. Balancing innovation with ethics is not optional. It’s essential.

Because the power to shape the brain is, quite literally, the power to shape the human experience.

And if we’re not careful, in building smarter technologies, we may forget what it means to be fully human.